Ask someone what an algorithm is today, and you’re likely to hear: “That’s what powers ChatGPT,” or “That’s what TikTok uses to recommend videos.” In the public imagination, the word “algorithm” has been almost completely consumed by one thing: AI.

But long before neural networks and machine learning took over the conversation, algorithms had a different meaning: a broader, more fundamental one. They were the logical, step-by-step procedures, the mathematical recipes, that powered everything from heart monitors and machine automation to advanced autopilot systems. They were built using human reasoning and established signals and systems theory grounded in hard science. Yet today, they seem all but invisible.

Algorithms That Built the Real World

Not all algorithms are probabilistic models trained on massive datasets. Some are mathematical systems designed to perform deterministic, repeatable, and real-time operations:

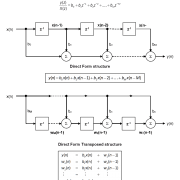

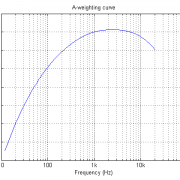

- Digital filters – Based on convolution and implemented via difference equations, these are the workhorses of digital signal processing (DSP).

- Z-transforms – The analytical backbone of signals and systems, essential for understanding and designing discrete-time systems.

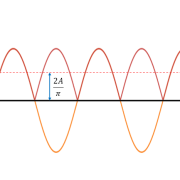

- Fourier analysis – Fundamental for noise cancellation and predictive maintenance applications.

- Control algorithms – From basic PID controllers to Kalman filters used in automotive, aerospace and robotics.
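The first two items are two views of the same object. A causal, linear, time-invariant digital filter is defined by a difference equation, and taking the Z-transform of both sides gives the transfer function used for analysis and design. The first-order smoother in the second line is an illustrative example chosen here, not one taken from the figures above:

```latex
\begin{aligned}
y[n] &= \sum_{k=0}^{M} b_k\,x[n-k] \;-\; \sum_{k=1}^{N} a_k\,y[n-k]
  &&\Longrightarrow\quad
  H(z) = \frac{Y(z)}{X(z)} = \frac{\sum_{k=0}^{M} b_k\,z^{-k}}{1 + \sum_{k=1}^{N} a_k\,z^{-k}} \\[4pt]
y[n] &= \alpha\,x[n] + (1-\alpha)\,y[n-1]
  &&\Longrightarrow\quad
  H(z) = \frac{\alpha}{1 - (1-\alpha)\,z^{-1}}
\end{aligned}
```

For the smoother, b_0 = α and a_1 = -(1 - α). Its single pole sits at z = 1 - α, so it is stable exactly when |1 - α| < 1, that is 0 < α < 2, and H(1) = 1 means a constant input passes through with unit gain. Stability and gain are read directly off the equations, with no training data involved.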

These are not just theoretical methods. They run our phones, cars, aircraft, medical devices, and more. They’re power-efficient, predictable, and interpretable. They’re deterministic algorithms.
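As an illustration of the “predictable and interpretable” claim, here is a minimal sketch of the discrete PID controller mentioned in the list above. Python is used only for readability (production firmware would more typically be written in C, and its timing determinism comes from the fixed operation count, not from the Python runtime). The `PID` class, the simple anti-windup scheme, and the toy first-order plant are assumptions for illustration, not a reference design.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Discrete positional PID controller with a fixed sample period `dt` (seconds).

    Each call to update() performs the same small, fixed set of arithmetic
    operations, so its cost per sample is known in advance.
    """
    kp: float                          # proportional gain
    ki: float                          # integral gain
    kd: float                          # derivative gain
    dt: float                          # sample period
    out_min: float = float("-inf")     # actuator lower limit
    out_max: float = float("inf")      # actuator upper limit
    integral: float = 0.0              # accumulated error * dt
    prev_error: float = 0.0            # error from the previous sample

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error

        self.integral += error * self.dt
        u = self.kp * error + self.ki * self.integral + self.kd * derivative

        # Simple anti-windup (conditional integration): if the output would
        # saturate, undo this sample's integration and clamp the output.
        if u > self.out_max or u < self.out_min:
            self.integral -= error * self.dt
            u = min(max(u, self.out_min), self.out_max)
        return u


def simulate_first_order_plant(pid: PID, setpoint: float, tau: float, steps: int) -> float:
    """Hypothetical toy plant dx/dt = (u - x) / tau, integrated with forward Euler at pid.dt.

    Returns the plant output after `steps` samples, starting from x = 0.
    """
    x = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, x)
        x += pid.dt * (u - x) / tau
    return x
```

For example, `PID(kp=2.0, ki=1.0, kd=0.0, dt=0.01)` driving `simulate_first_order_plant(pid, setpoint=1.0, tau=1.0, steps=3000)` settles close to the setpoint; the integral term removes the steady-state error that a proportional-only controller would leave on this plant. Each gain can be reasoned about on its own, which is exactly the kind of coefficient an engineer can inspect and tweak.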

What AI Can’t Replace

AI excels at tasks that involve ambiguity, classification, and massive amounts of data. But the world isn’t just made of pictures, text, and social media trends.

Many practical mission-critical applications, such as factory automation and robotic systems, automotive cruise control, and power plant control, require deterministic, real-time, fail-safe operation. That calls for deterministic DSP and control algorithms running on fault-tolerant hardware such as lockstep processors.

For these systems, we don’t need probabilistic guesses. We need determinism, in the sense of:

- A clear understanding of how the algorithm works and how it arrived at its result

- Stability guarantees

- Known latencies

- Precise resource usage

- Fail-safe operation (lockstep and triple modular redundancy architectures)

That’s not AI. That’s signals and systems and advanced fail-safe processor technology.

Lockstep Processor Technology for Mission-Critical Systems

Lockstep processor technology provides hardware redundancy for detecting faults in real-time applications. Processors such as the Arm Cortex-R4 and Cortex-R5 support a lockstep configuration, in which two identical cores execute the same instructions in parallel while hardware continuously compares their outputs.

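This approach is especially effective for detecting hardware faults arising from component ageing, environmental factors such as radiation, or electromagnetic interference (EMI). However, since both cores execute the same code, software bugs will not be detected. Dual lockstep can also only detect a fault, not correct it. For true fault tolerance, Triple Modular Redundancy (TMR) can be used: three redundant channels compute the same result, and a majority voter selects the value on which at least two agree, so a single faulty channel is outvoted and the system keeps running.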
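The voting step can be modelled in a few lines. This is a minimal software sketch, assuming each channel delivers an integer result such as a 32-bit register value; in practice the voter is usually dedicated hardware, and the function name `tmr_vote` is hypothetical.

```python
from typing import List, Tuple


def tmr_vote(a: int, b: int, c: int) -> Tuple[int, List[int]]:
    """Bitwise 2-out-of-3 majority vote over three redundant channel outputs.

    Each result bit takes the value held by at least two channels, so any
    single faulty channel is outvoted. Also returns the indices (0, 1, 2) of
    channels whose output differs from the voted value, for fault logging.
    """
    voted = (a & b) | (b & c) | (a & c)
    disagreeing = [i for i, v in enumerate((a, b, c)) if v != voted]
    return voted, disagreeing
```

For example, `tmr_vote(0b1010, 0b1010, 0b0010)` masks the fault in channel 2 and reports it. Because the vote is bitwise, two channels with faults in *different* bits are still corrected, as long as no bit position is wrong in two channels at once. Like dual lockstep, this does nothing against a software bug replicated in all three channels.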

While AI systems operate probabilistically and often lack transparency in their failure modes, lockstep-based designs ensure deterministic execution, immediate fault detection, and predictable safety behaviour. When safety is paramount, lockstep wins hands down.

ASIL Compliance and Automotive Safety

Lockstep is widely used to meet the higher Automotive Safety Integrity Levels (ASIL), the risk classes A to D defined in ISO 26262. In systems like adaptive cruise control, a mismatch between the lockstep cores triggers a transition to a safe state, disengaging the function rather than risking unsafe behaviour. For the most critical applications, such as flight control or nuclear plant monitoring, TMR is used to keep the system operating despite a single-point failure.
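The fail-safe behaviour described above can be sketched as a hypothetical software model. Real lockstep comparison happens in hardware, cycle by cycle; the class names, the latched `DISENGAGED` state, and the zero "safe output" here are assumptions chosen to illustrate the fail-safe pattern (detect and stop), as opposed to TMR's fail-operational pattern (outvote and continue).

```python
from enum import Enum
from typing import Callable


class State(Enum):
    ENGAGED = "engaged"
    DISENGAGED = "disengaged"  # latched safe state


class LockstepPair:
    """Software model of a dual-core lockstep pair guarding one control function.

    In real hardware both cores run identical code and a comparator checks
    their outputs; here `main` and `checker` stand in for the two cores so a
    fault can be injected into one of them.
    """

    def __init__(self, main: Callable[[float], float],
                 checker: Callable[[float], float],
                 safe_output: float = 0.0) -> None:
        self.main = main
        self.checker = checker
        self.safe_output = safe_output
        self.state = State.ENGAGED

    def step(self, measurement: float) -> float:
        if self.state is State.DISENGAGED:
            return self.safe_output  # stay in the safe state once latched
        y_main = self.main(measurement)
        y_check = self.checker(measurement)
        # Identical code on identical cores must yield bit-identical results,
        # so exact comparison (not a tolerance) is appropriate here.
        if y_main != y_check:
            self.state = State.DISENGAGED
            return self.safe_output
        return y_main
```

With a hypothetical gap-keeping law such as `lambda gap: 0.5 * (gap - 30.0)` on both cores, commands pass through unchanged; a single injected mismatch latches the pair into the safe state for the rest of the run.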

The Disappearance of Craftsmanship

AI didn’t rise because it was superior. It rose because it was easier.

In a world where fewer people understand physics, system modelling, or real-time signal design, it’s far more convenient to train a black box on loads of examples than to derive a model from first principles.

Many younger engineers today struggle to model a dynamic system. They often lack a solid grasp of the underlying physics, or of how to turn the dynamics into the transfer functions or block diagrams needed for implementation. This is compounded by limited knowledge of analog electronics and of the effects of noise on data.

So we give them data. And we give them frameworks to grind through that data until a result falls out. And when these AI-driven systems fail, there is no coefficient to tweak. No transfer function to inspect. Only a tangled web of parameters, and the shrug of a retrain.

It would seem that the artistry of algorithm design—once rooted in insight, mathematics and physics—is being replaced by guesswork.

A Place for Both Technologies

AI isn’t the villain! In fact, in many cases, it’s a breakthrough.

There are real-world problems, ones too complex or nonlinear to model easily, where AI offers a practical solution. Face recognition, gesture detection, and certain kinds of chemical substance classification are all areas where traditional signal processing struggles, and where AI simplifies the unsimplifiable.

But we must remember: just because we can’t model something doesn’t mean we should stop trying. AI should be seen as one of the many tools that can be employed, not a substitute for understanding.

Generative AI, like ChatGPT, is proving invaluable in tasks such as writing and refactoring code — accelerating prototyping and helping engineers quickly develop new ideas. While this is a remarkable technical achievement, it must be viewed in context, as the AI lacks true understanding, common sense, and awareness of the real-world constraints surrounding the problem it’s addressing.

Real-Time Edge Intelligence — Built on Science

The future doesn’t belong to AI alone. It belongs to hybrid systems that combine the best of both worlds.

Real-Time Edge Intelligence (RTEI) is the next frontier: using DSP algorithms grounded in physics and mathematics to extract features of interest, followed by ML models that classify or make higher-level inferences on high-quality feature data.

This layered approach offers:

- Interpretability

- Efficiency

- Robustness

- Scientific traceability

In short, ML models work better when they are fed by high-quality features derived from DSP algorithms based on scientific principles.
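A minimal sketch of that layering, assuming a vibration-monitoring use case: the DSP stage computes two physically meaningful features per frame (RMS level, and the amplitude at a hypothetical fault frequency via the Goertzel algorithm), and a nearest-centroid rule stands in for the ML stage. The frequencies, class labels, and centroid values are illustrative assumptions, not values from any real system.

```python
import math
from typing import Dict, Sequence, Tuple


def rms(frame: Sequence[float]) -> float:
    """DSP feature 1: root-mean-square level of the frame."""
    return math.sqrt(sum(v * v for v in frame) / len(frame))


def goertzel_amplitude(frame: Sequence[float], freq_hz: float, fs_hz: float) -> float:
    """DSP feature 2: amplitude of the component at freq_hz.

    The Goertzel algorithm is a second-order IIR recurrence that evaluates a
    single DFT term. The estimate is exact for a sinusoid that completes an
    integer number of cycles in the frame.
    """
    w = 2.0 * math.pi * freq_hz / fs_hz
    coeff = 2.0 * math.cos(w)
    s1 = s2 = 0.0  # s[n-1], s[n-2]
    for v in frame:
        s0 = v + coeff * s1 - s2
        s2, s1 = s1, s0
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2  # |X(w)|^2
    return 2.0 * math.sqrt(max(power, 0.0)) / len(frame)


def extract_features(frame: Sequence[float], fs_hz: float,
                     fault_hz: float) -> Tuple[float, float]:
    """DSP front end: map a raw frame to a small, physically meaningful feature vector."""
    return rms(frame), goertzel_amplitude(frame, fault_hz, fs_hz)


def classify(features: Tuple[float, float],
             centroids: Dict[str, Tuple[float, float]]) -> str:
    """Stand-in ML stage: nearest-centroid classifier in feature space."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```

Because each feature has a physical meaning, a misclassification can be traced back to a specific, inspectable quantity (too much energy at the fault frequency, say), which is the interpretability and traceability argument above in miniature.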

How AI Hijacked the Conversation

In part, this is our own fault. As an industry, we let “algorithm” become synonymous with black-box AI. We stopped talking about the elegance of well-designed digital filters for cleaning sensor data.

Even the educational pipeline reflects this shift:

- Students focus on TensorFlow rather than on system modelling and applied mathematics.

- Conferences showcase AI papers as innovation while overlooking solutions built on DSP.

- Curricula and investors increasingly treat AI as the only form of innovation, ignoring traditional DSP altogether.

It would seem that the hype around AI has buried much genuine innovation rooted in traditional science and mathematics. That needs to change if we are truly to realise the strength of RTEI in tackling the massive societal challenges facing us in the years to come.
